Self-Consuming Generative Models Go MAD

http://arxiv.org/abs/2307.01850

Sina Alemohammad, Josue Casco-Rodriguez, Lorenzo Luzi, Ahmed Imtiaz Humayun,

Hossein Babaei, Daniel LeJeune, Ali Siahkoohi, Richard G. Baraniuk

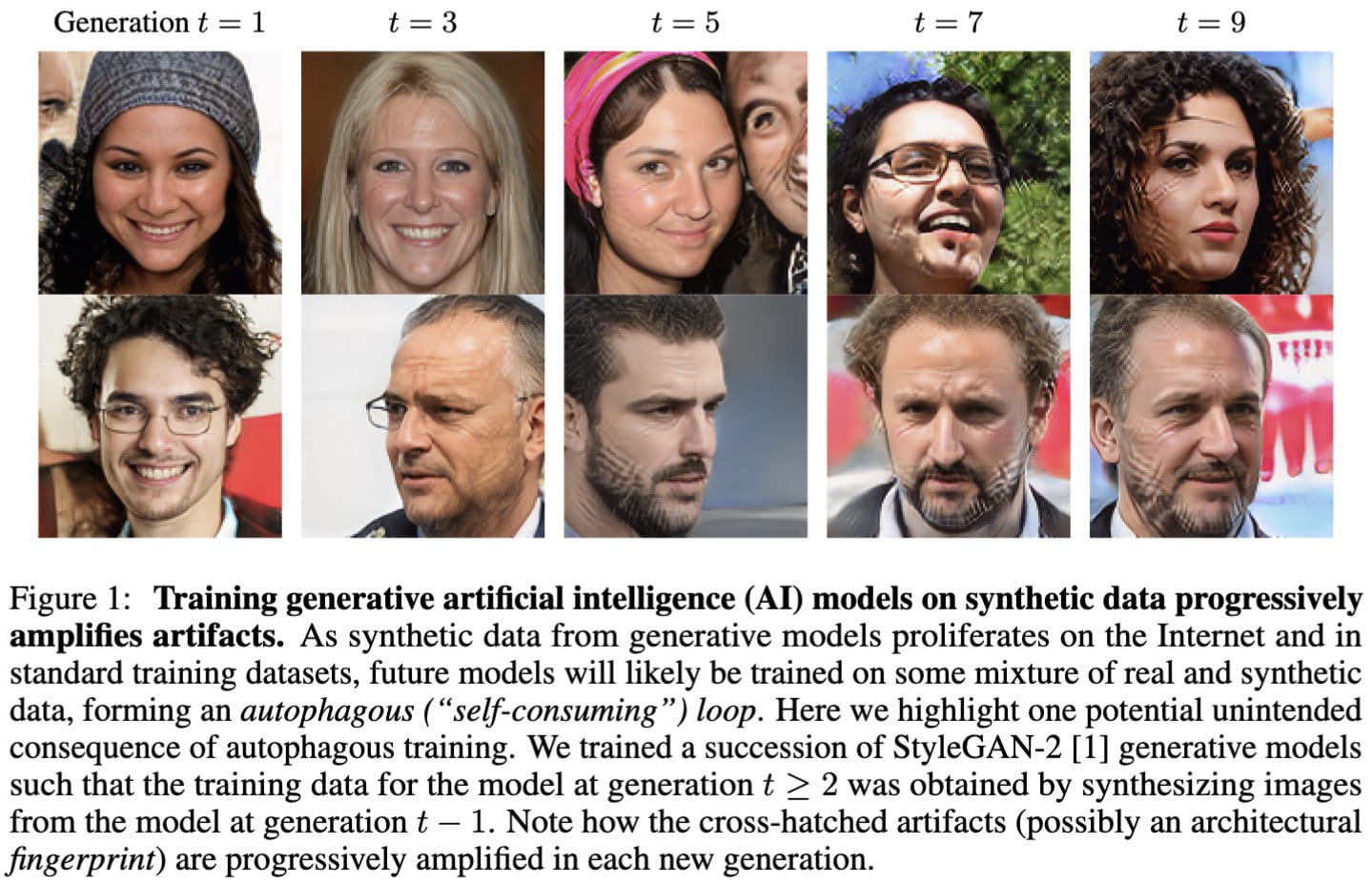

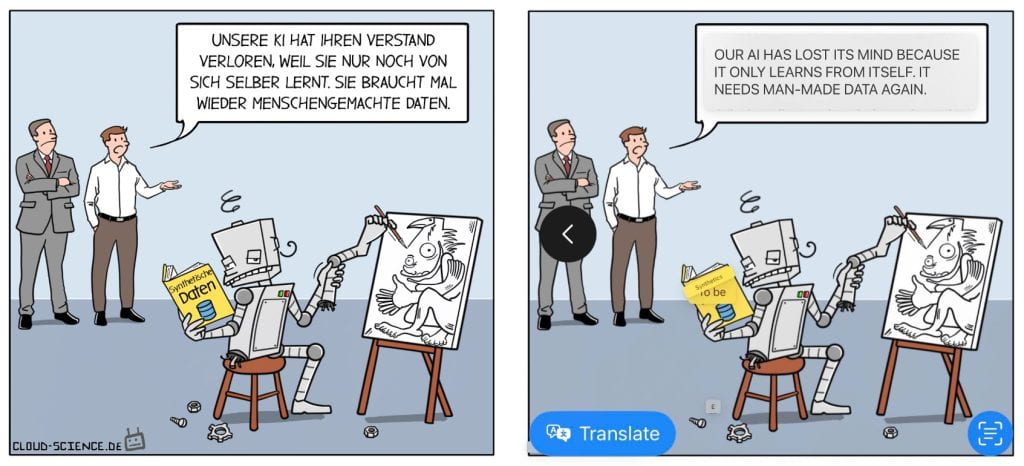

Abstract: Seismic advances in generative AI algorithms for imagery, text, and other data types has led to the temptation to use synthetic data to train next-generation models. Repeating this process creates an autophagous ("self-consuming") loop whose properties are poorly understood. We conduct a thorough analytical and empirical analysis using state-of-the-art generative image models of three families of autophagous loops that differ in how fixed or fresh real training data is available through the generations of training and in whether the samples from previous-generation models have been biased to trade off data quality versus diversity. Our primary conclusion across all scenarios is that without enough fresh real data in each generation of an autophagous loop, future generative models are doomed to have their quality (precision) or diversity (recall) progressively decrease. We term this condition Model Autophagy Disorder (MAD), making analogy to mad cow disease.

In the news:

- "Generative AI Goes 'MAD' When Trained on AI-Created Data Over Five Times," Tom's Hardware, 12 July 2023

- "AI Loses Its Mind After Being Trained on AI-Generated Data," Futurism, 12 July 2023

- "Scientists make AI go crazy by feeding it AI-generated content," TweakTown, 13 July 2023

- "AI models trained on AI-generated data experience Model Autophagy Disorder (MAD) after approximately five training cycles," Multiplatform.AI, 13 July 2023

- "AIs trained on AI-generated images produce glitches and blurs,” NewScientist, 18 July 2023

- "Training AI With Outputs of Generative AI Is Mad" CDOtrends, 19 July 2023

- "When AI Is Trained on AI-Generated Data, Strange Things Start to Happen" Futurism, 1 August 2023

- "Mad AI risks destroying the Information Age" The Telegraph, 1 February 2024

- ''AI's 'mad cow disease' problem tramples into earnings season'', Yahoo!finance, 12 April 2024

- "Cesspool of AI crap or smash hit? LinkedIn’s AI-powered Collaborative Articles offer a sobering peek at the future of content'' Fortune, 18 April 2024

- "AI's Mad Loops," Rice Magazine, February 2025

"A Blessing of Dimensionality in Membership Inference through Regularization" by DSP group members

"A Blessing of Dimensionality in Membership Inference through Regularization" by DSP group members

Richard Baraniuk has been selected for the

Richard Baraniuk has been selected for the