Self-Improving Diffusion Models with Synthetic Data

Sina Alemohammad, Ahmed Imtiaz Humayun, Richard Baraniuk

Rice University

Shruti Agarwal, John Collomosse

Adobe Research

arxiv.org/abs/2408.16333, 30 August 2024

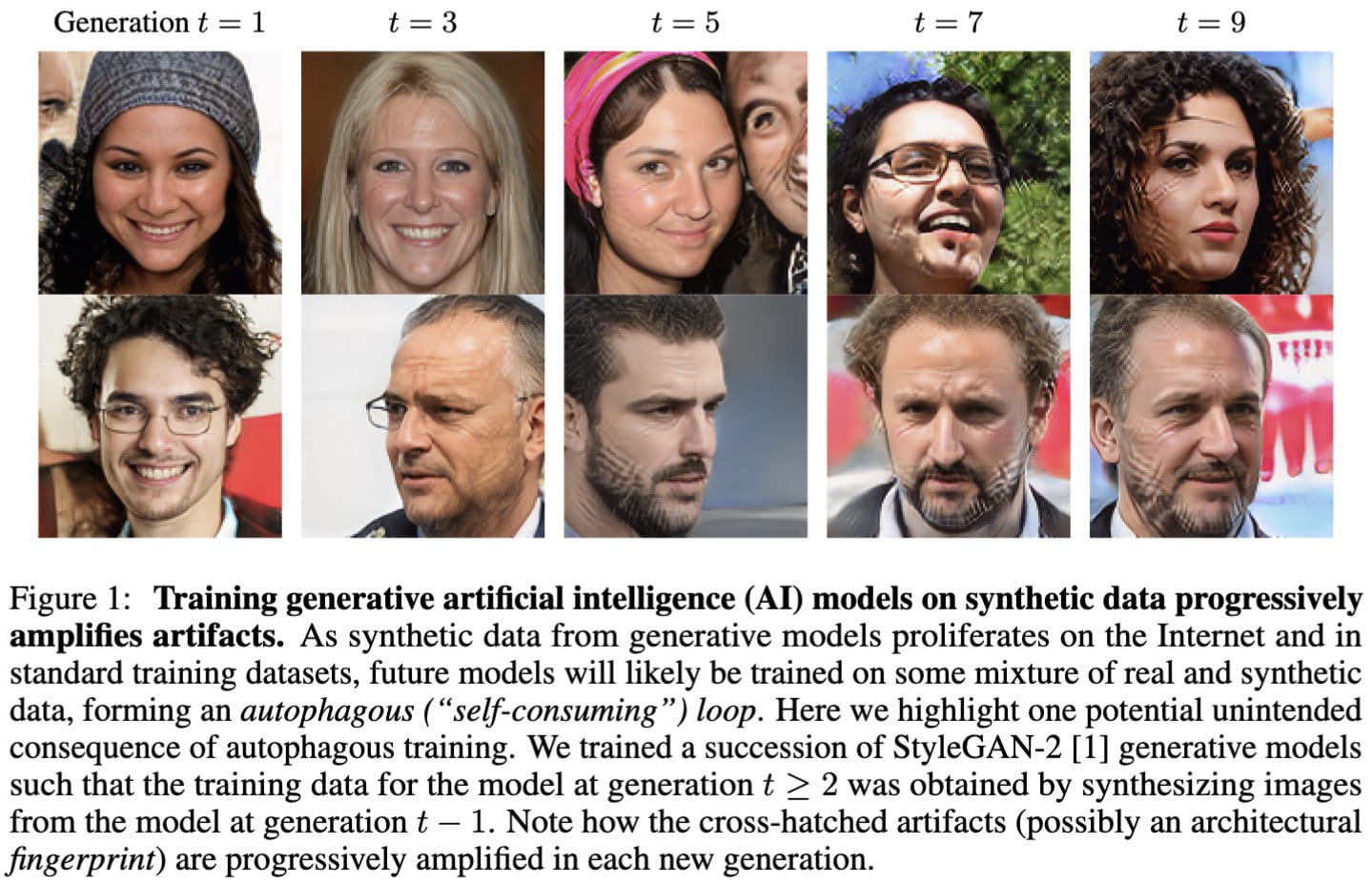

Abstract: The artificial intelligence (AI) world is running out of real data for training increasingly large generative models, resulting in accelerating pressure to train on synthetic data. Unfortunately, training new generative models with synthetic data from current or past generation models creates an autophagous (self-consuming) loop that degrades the quality and/or diversity of the synthetic data in what has been termed model autophagy disorder (MAD) and model collapse. Current thinking around model autophagy recommends that synthetic data is to be avoided for model training lest the system deteriorate into MADness. In this paper, we take a different tack that treats synthetic data differently from real data. Self-IMproving diffusion models with Synthetic data (SIMS) is a new training concept for diffusion models that uses self-synthesized data to provide negative guidance during the generation process to steer a model's generative process away from the non-ideal synthetic data manifold and towards the real data distribution. We demonstrate that SIMS is capable of self-improvement; it establishes new records based on the Fréchet inception distance (FID) metric for CIFAR-10 and ImageNet-64 generation and achieves competitive results on FFHQ-64 and ImageNet-512. Moreover, SIMS is, to the best of our knowledge, the first prophylactic generative AI algorithm that can be iteratively trained on self-generated synthetic data without going MAD. As a bonus, SIMS can adjust a diffusion model's synthetic data distribution to match any desired in-domain target distribution to help mitigate biases and ensure fairness.

The figure above illustrates that SIMS simultaneously improves diffusion modeling and synthesis performance while acting as a prophylactic against Model Autophagy Disorder (MAD). First row: Samples from a base diffusion model (EDM2-S) trained on 1.28M real images from the ImageNet-512 dataset (Fréchet inception distance, FID = 2.56). Second row: Samples from the base model after fine-tuning with 1.5M images synthesized from the base model, which degrades synthesis performance and pushes the model towards MADness (model collapse) (FID = 6.07). Third row: Samples from the base model after applying SIMS using the same self-generated synthetic data as in the second row (FID = 1.73).

To help organize the growing literature on AI self-consuming feedback loops, we have launched a "Self-Consuming AI Resources" archive at dsp.rice.edu/ai-loops.

To help organize the growing literature on AI self-consuming feedback loops, we have launched a "Self-Consuming AI Resources" archive at dsp.rice.edu/ai-loops.