A Visual Tour of Current Challenges in Multimodal Language Models

Shashank Sonkar, Naiming Liu, Richard G. Baraniuk

arXiv preprint 2210.12565

October 2022

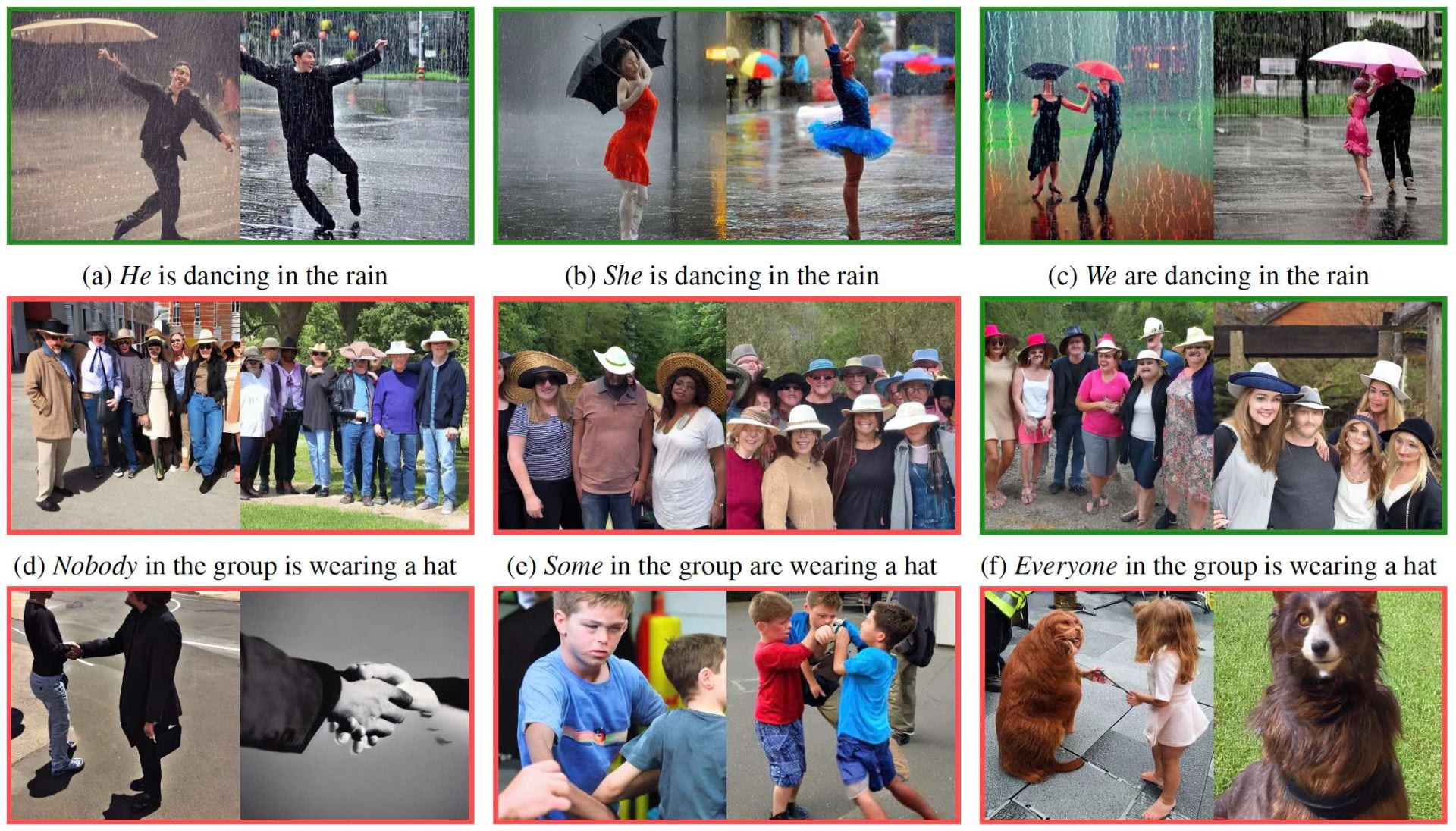

Transformer models trained on massive text corpora have become the de facto models for a wide range of natural language processing tasks. However, learning effective word representations for function words remains challenging. Multimodal learning, which visually grounds transformer models in imagery, can overcome these challenges to some extent; however, there is still much work to be done. In this study, we explore the extent to which visual grounding facilitates the acquisition of function words using stable diffusion models (SDMs) that employ multimodal models for text-to-image generation. Out of seven categories of function words, along with numerous subcategories, we find that SDMs effectively model only a small fraction of function words: a few pronoun subcategories and relatives. We hope that our findings will stimulate the development of new datasets and approaches that enable multimodal models to learn better representations of function words.

Above: Sample images depicting an SDM's success (green border) and failure (red border) in capturing the semantics of different subcategories of pronouns. (a)-(c) show that the information about gender and count implicit in subject pronouns such as he, she, and we is accurately depicted. For indefinite pronouns, however, SDMs fail to capture the notions of negatives ((d) nobody), existentials ((e) some), and universals ((f) everyone). Likewise, SDMs fail to capture the meaning of reflexive pronouns such as (g) myself, (h) himself, and (i) herself.

We provide the code on GitHub for readers to replicate our findings and explore further.
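As a rough illustration of how such a probe could be organized, the sketch below groups the pronouns named in the figure caption by subcategory and builds one text prompt per word. It is not the authors' released code: the `TEMPLATE` string and the `build_prompts` helper are hypothetical names introduced here, and the prompts actually used in the study are not stated in this section.

```python
from typing import Dict, List, Tuple

# Pronoun subcategories and example words, taken from the figure caption.
PRONOUNS: Dict[str, List[str]] = {
    "subject": ["he", "she", "we"],
    "indefinite": ["nobody", "some", "everyone"],
    "reflexive": ["myself", "himself", "herself"],
}

# Hypothetical prompt template (an assumption, not the paper's wording).
TEMPLATE = "{word} walking in a park"


def build_prompts(
    categories: Dict[str, List[str]], template: str = TEMPLATE
) -> List[Tuple[str, str, str]]:
    """Return (subcategory, word, prompt) triples, one per function word."""
    return [
        (subcategory, word, template.format(word=word))
        for subcategory, words in categories.items()
        for word in words
    ]


if __name__ == "__main__":
    for subcategory, word, prompt in build_prompts(PRONOUNS):
        print(f"{subcategory:<10} {word:<8} {prompt}")
```

Each prompt could then be passed to a text-to-image model, and the generated images inspected by subcategory to judge whether the pronoun's meaning (for example, gender, count, or negation) is reflected in the output.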