DSP PhD student Tan Nguyen has received a prestigious Computing Innovation Postdoctoral Fellowship (CIFellows Program) from the Computing Research Association (CRA). He plans to work with Professor Stan Osher at UCLA on predicting drug-target binding affinity to study how current drugs work on new targets as a treatment for COVID-19 and future pandemic diseases. Tan plans to develop a new class of deep learning models that are aware of the structural information of drugs, scalable to large datasets, and generalizable to unseen cases.

DSP Alum Mark Davenport Selected as Rice Outstanding Young Engineering Alumnus

Mark Davenport (PhD, 2010) has been selected as a Rice Outstanding Young Engineering Alumnus. The award, established in 1996, recognizes the achievements of Rice Engineering alumni under 40 years old. Recipients are chosen by the George R. Brown School of Engineering and the Rice Engineering Alumni (REA).

Mark is an Associate Professor of Electrical and Computer Engineering at Georgia Tech. His many other honors include the Hershel Rich Invention Award and the Budd Award for best engineering thesis at Rice, an NSF Mathematical Sciences Postdoctoral Research Fellowship, an NSF CAREER Award, an AFOSR YIP Award, a Sloan Research Fellowship, and a PECASE.

Mark spent time at Rice in winter 2020 as the Texas Instruments Visiting Professor.

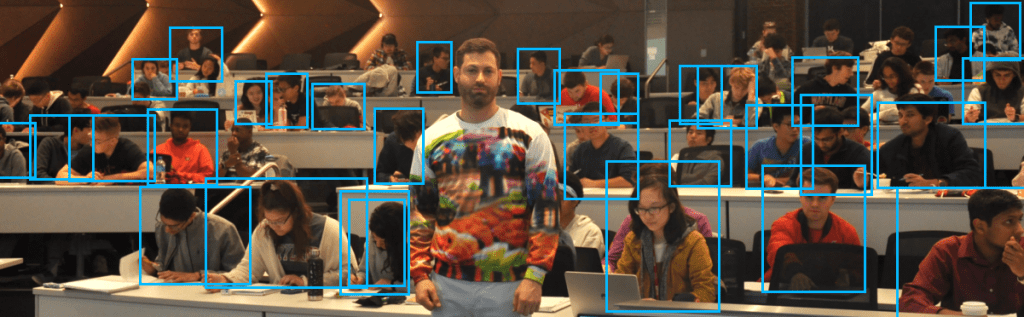

Adversarial Clothing

DSP postdoc alum Thomas Goldstein has launched a new clothing line that evades detection by machine learning vision algorithms.

This stylish pullover is a great way to stay warm this winter, whether in the office or on the go. It features a stay-dry microfleece lining, a modern fit, and adversarial patterns that evade most common object detectors. In this demonstration, the YOLOv2 detector is evaded using a pattern trained on the COCO dataset with a carefully constructed objective.

Paper: "Making an Invisibility Cloak: Real World Adversarial Attacks on Object Detectors," by Z. Wu, S-N. Lim, L. Davis, T. Goldstein, October 2019

New Yorker article: Dressing for the Surveillance Age
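The core idea behind such a pattern is to optimize the pixels of a printable patch so that a detector's confidence drops. The attack in the paper targets the real YOLOv2 detector over COCO images with a more elaborate objective; the snippet below is only a toy sketch of the underlying projected-gradient idea. It assumes a hypothetical linear-logistic "person" scorer in place of a real detector, and every name and value in it (`toy_person_score`, `optimize_patch`, the 8x8 image, the 4x4 mask, the weights and bias) is invented for illustration.

```python
import numpy as np


def toy_person_score(image: np.ndarray, weights: np.ndarray, bias: float) -> float:
    # Hypothetical stand-in for a detector's "person" confidence:
    # a sigmoid applied to a linear logit over the pixels.
    logit = float(np.sum(weights * image) + bias)
    return 1.0 / (1.0 + np.exp(-logit))


def optimize_patch(image, mask, weights, lr=0.1, steps=50):
    """Projected gradient descent on the pixels inside `mask` to lower the toy score.

    The objective is the logit sum(weights * image) + bias, which is monotone in the
    score, so its gradient with respect to the image is simply `weights`.
    """
    adv = image.astype(float).copy()
    for _ in range(steps):
        adv -= lr * weights * mask    # descend only where the patch is allowed
        adv = np.clip(adv, 0.0, 1.0)  # project back onto valid pixel intensities
    return adv


# Toy setup (all values assumed): an 8x8 gray image with a 4x4 patch region.
image = np.full((8, 8), 0.5)
mask = np.zeros((8, 8))
mask[2:6, 2:6] = 1.0
weights = np.ones((8, 8))
bias = -28.0

adv = optimize_patch(image, mask, weights)
print(f"score before: {toy_person_score(image, weights, bias):.3f}")
print(f"score after:  {toy_person_score(adv, weights, bias):.3f}")
```

Only the masked region changes, which mirrors the physical constraint that the pattern lives on the garment. Against a real deep detector, the gradient would come from backpropagation rather than a closed form.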

Rice DSP to Lead ONR MURI on Foundations of Deep Learning

Rice DSP group faculty member Richard Baraniuk will lead a team of engineers, computer scientists, mathematicians, and statisticians on a five-year Office of Naval Research (ONR) Multidisciplinary University Research Initiative (MURI) project to develop a theory of deep learning grounded in rigorous mathematical principles. The team includes:

- Richard Baraniuk, Rice University (project director)

- Moshe Vardi, Rice University

- Ronald DeVore, Texas A&M University

- Stanley Osher, UCLA

- Thomas Goldstein, University of Maryland

- Rama Chellappa, University of Maryland

- Ryan Tibshirani, Carnegie Mellon University

- Robert Nowak, University of Wisconsin

International collaborators include the Alan Turing and Isaac Newton Institutes in the UK.

Scheduled Restart Momentum for Accelerated Stochastic Gradient Descent

B. Wang*, T. M. Nguyen*, A. L. Bertozzi***, R. G. Baraniuk**, S. J. Osher**. "Scheduled Restart Momentum for Accelerated Stochastic Gradient Descent", arXiv, 2020.

GitHub code: https://github.com/minhtannguyen/SRSGD.

Blog: http://almostconvergent.blogs.rice.edu/2020/02/21/srsgd.

Slides: SRSGD

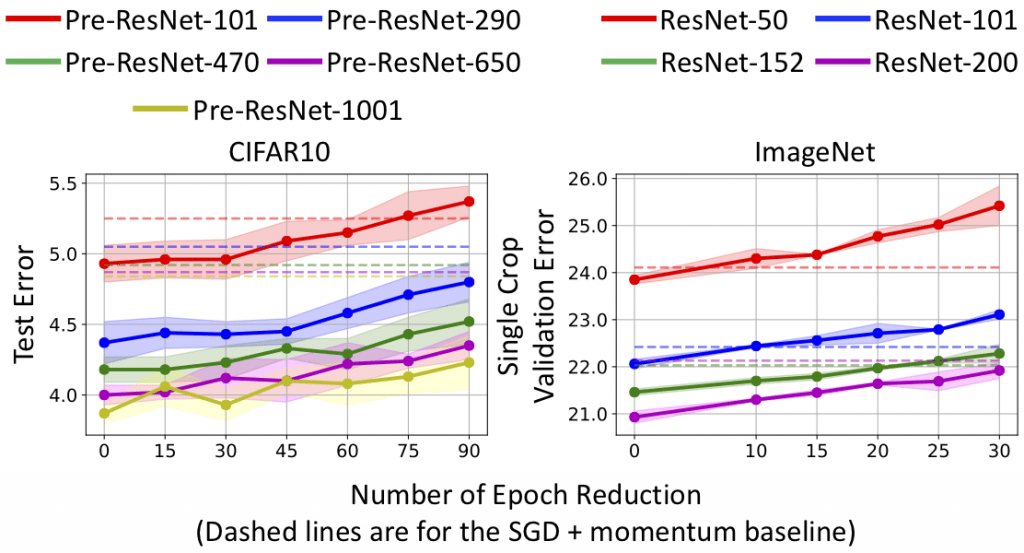

Stochastic gradient descent (SGD) with constant momentum and its variants, such as Adam, are the optimization algorithms of choice for training deep neural networks (DNNs). Since DNN training is incredibly computationally expensive, there is great interest in speeding up convergence. Nesterov accelerated gradient (NAG) improves the convergence rate of gradient descent (GD) for convex optimization using a specially designed momentum; however, it accumulates error when an inexact gradient is used (such as in SGD), slowing convergence at best and diverging at worst. In this paper, we propose Scheduled Restart SGD (SRSGD), a new NAG-style scheme for training DNNs. SRSGD replaces the constant momentum in SGD with the increasing momentum in NAG but stabilizes the iterations by resetting the momentum to zero according to a schedule. Using a variety of models and benchmarks for image classification, we demonstrate that, in training DNNs, SRSGD significantly improves convergence and generalization; for instance, in training ResNet200 for ImageNet classification, SRSGD achieves an error rate of 20.93% vs. the benchmark of 22.13%. These improvements become more significant as the network grows deeper. Furthermore, on both CIFAR and ImageNet, SRSGD reaches similar or even better error rates with fewer training epochs compared to the SGD baseline.
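The scheduled-restart idea fits in a few lines. The following is a minimal NumPy sketch, not the official implementation from the repository linked above. It assumes the NAG-style increasing momentum t/(t+3), where t counts iterations since the last restart and a restart happens every `restart_freq` iterations. The function names, the toy ill-conditioned quadratic, the Gaussian noise standing in for minibatch gradient noise, and all hyperparameter values are illustrative assumptions.

```python
import numpy as np

# Hypothetical toy problem: f(w) = 0.5 * sum(d * w**2) with an ill-conditioned diagonal d.
CURVATURE = np.array([1.0, 10.0])
NOISE_STD = 0.01  # stands in for minibatch gradient noise (assumed value)


def quad_loss(w: np.ndarray) -> float:
    return 0.5 * float(np.sum(CURVATURE * w**2))


def noisy_grad(w: np.ndarray, rng: np.random.Generator) -> np.ndarray:
    # Exact gradient plus Gaussian noise, mimicking an inexact (stochastic) gradient.
    return CURVATURE * w + NOISE_STD * rng.standard_normal(w.shape)


def srsgd_momentum(k: int, restart_freq: int) -> float:
    # NAG-style increasing momentum t / (t + 3), with t reset to 0 every restart_freq steps.
    t = k % restart_freq
    return t / (t + 3)


def srsgd(grad_fn, w0, rng, lr=0.05, restart_freq=40, num_steps=200):
    w_prev = np.asarray(w0, dtype=float)
    v = w_prev.copy()  # look-ahead point where the gradient is evaluated
    for k in range(num_steps):
        w = v - lr * grad_fn(v, rng)          # (stochastic) gradient step from v
        mu = srsgd_momentum(k, restart_freq)  # momentum is 0 right after each restart
        v = w + mu * (w - w_prev)             # Nesterov-style extrapolation
        w_prev = w
    return w_prev


rng = np.random.default_rng(0)
w0 = np.array([5.0, 5.0])
w_final = srsgd(noisy_grad, w0, rng)
print(f"loss: {quad_loss(w0):.1f} -> {quad_loss(w_final):.2e}")
```

Capping t at `restart_freq - 1` bounds the momentum below 1, which is what keeps the accumulated gradient error from building up indefinitely. Setting `restart_freq` very large recovers plain NAG-style momentum, and setting it to 1 recovers momentum-free SGD.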

Figure 1: Error vs. depth of ResNet models trained with SRSGD and with the baseline SGD with constant momentum. The advantage of SRSGD continues to grow with depth.

Figure 2: Test error vs. reduction in the number of training epochs for CIFAR10 and ImageNet. The dashed lines show the test errors of the SGD baseline.

*: Co-first authors; **: Co-last authors; ***: Middle author

Two Papers at AISTATS 2020

The DSP group will present two papers at the International Conference on Artificial Intelligence and Statistics (AISTATS) in June 2020 in Palermo, Sicily, Italy:

- D. LeJeune, H. Javadi, R. G. Baraniuk, "The Implicit Regularization of Ordinary Least Squares Ensembles," AISTATS, 2020

- D. LeJeune, G. Dasarathy, R. G. Baraniuk, "Thresholding Graph Bandits with GrAPL," AISTATS, 2020

DSP Alum Faculty On the Move

In Fall 2019, Chinmay Hegde (PhD 2012) moved from Iowa State University to New York University (NYU).

In Fall 2019, Vinay Ribeiro (PhD 2005) moved from IIT-Delhi to IIT-Bombay.

In Summer 2020, Christoph Studer (postdoc 2010-12) will move from Cornell University to ETH-Zurich.

Rice DSP Papers and Workshops at NeurIPS 2019

Rice DSP will again be well represented at NeurIPS 2019 in Vancouver, Canada:

- R. Balestriero, R. Cosentino, B. Aazhang, R. G. Baraniuk, "The Geometry of Deep Networks: Power Diagram Subdivision," Neural Information Processing Systems (NeurIPS), 2019.

- Y. Huang, S. Dai, T. M. Nguyen, P. Bao, D.Y. Tsao, R. G. Baraniuk, A. Anandkumar, "Brain-Inspired Robust Vision using Convolutional Neural Networks with Feedback," Neuroscience<->AI Workshop, NeurIPS, 2019.

- G. Portwood, P. Mitra, M. D. Ribeiro, T. M. Nguyen, B. Nadiga, J. Saenz, M. Chertkov, A. Garg, A. Anandkumar, A. Dengel, R. G. Baraniuk, D. Schmidt, "Turbulence Forecasting via NeuralODE," Machine Learning and the Physical Sciences Workshop, NeurIPS, 2019.

- Y. Huang, S. Dai, T. M. Nguyen, P. Bao, D.Y. Tsao, R. G. Baraniuk, A. Anandkumar, "Out-of-Distribution Detection using Neural Rendering Models," Women in Machine Learning Workshop, NeurIPS, 2019.

- D. Brenes, C. Barberan, B. Hunt, R. G. Baraniuk, R. Richards-Kortum, "Multi-Task Deep Learning Model for Improved Histopathology Prediction from In-Vivo Microscopy Images," LatinX Workshop, NeurIPS, 2019.

- Co-organizing: NeurIPS Workshop on Solving Inverse Problems with Deep Networks, 2019

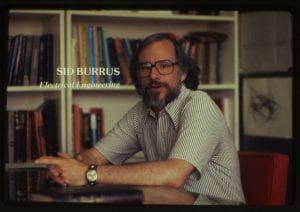

The Philosopher Engineer

An enlightening profile of C. Sidney Burrus, Dean and ECE Professor Emeritus and co-founder of the Rice DSP Group, appeared in the 24 October 2019 edition of Rice Magazine.

Christoph Studer Tenured at Cornell

DSP alum Christoph Studer (postdoc 2010-12) has been awarded tenure at Cornell University. Christoph is an expert in signal processing, communications, machine learning, and their implementation in VLSI circuits. He has received a Swiss National Science Foundation postdoc fellowship, a US NSF CAREER Award, and numerous best paper awards. He is still not a fan of Blender.